Introduction

Power budgeting is the dominant constraint for autonomous robots that must operate for long periods without human supervision. The constraint tightens further when a robot also performs on-device machine learning or perception, such as basic camera-based vision.

This lab note focuses on microcontroller units (MCUs) capable of running TensorFlow Lite Micro or similar embedded machine learning (ML) frameworks. The scope is therefore limited to MCUs with enough on-chip RAM or external PSRAM to support perception workloads. Many microcontrollers are on the market, but most are optimized for simple control tasks rather than memory-intensive inference. This note concentrates on a subset of popular, well-supported MCU families already used in robotics and embedded ML, and evaluates them through the lens of energy discipline rather than peak performance.

Power Budgets in ML-Capable Robots

In robots that perform perception, power consumption is shaped less by raw compute than by how memory and wake cycles are managed. Image buffers, tensors, and intermediate activations all occupy RAM, and moving that data consumes energy. For solar-assisted or battery-limited robots, deep sleep current and wake latency typically dominate total lifetime energy usage, even when ML inference is involved, because the robot spends most of its life asleep.

A usable power budget is therefore not a single number. It is a behavioral envelope that determines how often a robot can sense, how much it can perceive, and how quickly it must return to sleep.
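One way to make this envelope concrete is a simple duty-cycle model: average current is the time-weighted mix of active and sleep current over one wake period, and battery life follows from capacity. The sketch below is a host-side Python calculation under simplifying assumptions (wake latency is charged at full active current, battery capacity is ideal, and every current figure is a hypothetical placeholder rather than a measurement of any MCU). The names `DutyCycle`, `sleep_share`, and `battery_life_days` are illustrative.

```python
from dataclasses import dataclass


@dataclass
class DutyCycle:
    """One repeating sense-and-sleep cycle. All figures are hypothetical inputs."""
    sleep_current_ma: float   # deep sleep current
    active_current_ma: float  # current while awake (wake-up, sensing, inference)
    wake_ms: float            # wake latency before useful work starts
    active_ms: float          # sensing + inference time per cycle
    period_s: float           # time between consecutive wake-ups

    def _charges(self) -> tuple[float, float]:
        """Return (awake_charge, sleep_charge) per cycle in mA*ms."""
        period_ms = self.period_s * 1000.0
        awake_ms = self.wake_ms + self.active_ms
        if awake_ms > period_ms:
            raise ValueError("awake time exceeds the wake period")
        # Assumption: wake latency is charged at full active current.
        awake = self.active_current_ma * awake_ms
        asleep = self.sleep_current_ma * (period_ms - awake_ms)
        return awake, asleep

    def average_current_ma(self) -> float:
        awake, asleep = self._charges()
        return (awake + asleep) / (self.period_s * 1000.0)

    def sleep_share(self) -> float:
        """Fraction of total charge consumed while asleep."""
        awake, asleep = self._charges()
        return asleep / (awake + asleep)


def battery_life_days(capacity_mah: float, avg_current_ma: float) -> float:
    """Ideal lifetime, ignoring self-discharge, temperature, and regulator losses."""
    return capacity_mah / avg_current_ma / 24.0


# Hypothetical robot: wakes every 10 minutes for 200 ms.
cycle = DutyCycle(sleep_current_ma=0.1, active_current_ma=100.0,
                  wake_ms=50.0, active_ms=150.0, period_s=600.0)
print(f"average current: {cycle.average_current_ma():.3f} mA")
print(f"charge spent asleep: {cycle.sleep_share():.0%}")
print(f"2000 mAh lifetime: {battery_life_days(2000.0, cycle.average_current_ma()):.0f} days")
```

With these placeholder numbers, about three quarters of the charge is spent asleep even though the awake workload draws 100 mA. In this model, cutting sleep current from 0.1 mA to 0.01 mA saves more energy than halving inference time would.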

ESP32-S3 and Related ESP32 Variants

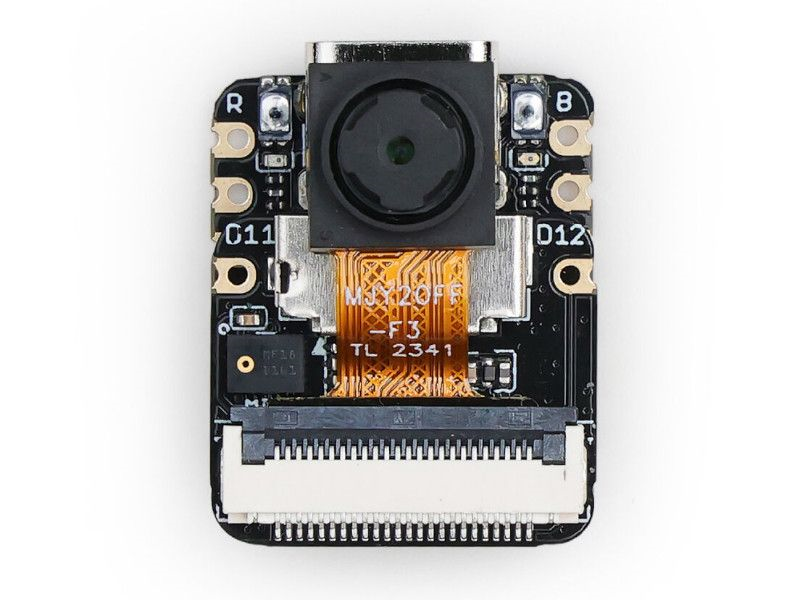

The ESP32-S3 is one of the most commonly used microcontrollers for embedded ML and perception in small robots. Its defining characteristic is the external PSRAM available on many modules, typically several megabytes. This memory enables frame buffers, modest convolutional models, and more flexible vision pipelines than most MCUs can support.
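A quick way to see why PSRAM matters is to compute uncompressed frame buffer sizes. The sketch assumes raw (non-JPEG) frames and an illustrative budget of 256 KB of internal RAM left free for buffers; the real figure depends on the firmware, radio stack, and module. The 8 MB PSRAM figure reflects one common module option, not every ESP32-S3 board. `frame_bytes` and `fits` are hypothetical helper names.

```python
# Bytes per pixel for common uncompressed camera output formats.
BYTES_PER_PIXEL = {"GRAYSCALE": 1, "RGB565": 2, "RGB888": 3}

RESOLUTIONS = {
    "96x96": (96, 96),
    "QQVGA": (160, 120),
    "QVGA": (320, 240),
    "VGA": (640, 480),
}


def frame_bytes(width: int, height: int, fmt: str) -> int:
    """Size of one raw frame in bytes."""
    return width * height * BYTES_PER_PIXEL[fmt]


def fits(width: int, height: int, fmt: str, budget_bytes: int, n_buffers: int = 1) -> bool:
    """True if n_buffers raw frames fit in the given memory budget."""
    return frame_bytes(width, height, fmt) * n_buffers <= budget_bytes


INTERNAL_BUDGET = 256 * 1024    # hypothetical free internal SRAM for frames
PSRAM_BUDGET = 8 * 1024 * 1024  # e.g. a module with 8 MB of PSRAM

for name, (w, h) in RESOLUTIONS.items():
    size = frame_bytes(w, h, "RGB565")
    print(f"{name:>6} RGB565: {size:>7} B  "
          f"internal x2: {fits(w, h, 'RGB565', INTERNAL_BUDGET, 2)}  "
          f"PSRAM x2: {fits(w, h, 'RGB565', PSRAM_BUDGET, 2)}")
```

Under these assumptions, double buffering a QVGA RGB565 stream (2 × 153,600 bytes) already exceeds the internal budget, while a pair of VGA frames fits comfortably in PSRAM.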

TensorFlow Lite Micro is well supported on the ESP32-S3, and its camera interfaces are mature. From a capability standpoint, this platform offers one of the easiest paths to on-device vision without a single-board computer (SBC). The tradeoff is power behavior. Active current is higher than that of simpler MCUs, and because the chip integrates Wi-Fi and Bluetooth LE radios, energy efficiency depends heavily on correct configuration. Deep sleep modes are effective, but achieving low sleep current requires deliberate software control.

In practice, the ESP32-S3 works best when perception is bursty and intentional. It can do more than most MCUs, but it demands discipline to remain energy efficient.
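Bursty operation pays off because each wake carries a fixed overhead (boot, clock and camera start-up) that can be amortized over several frames. The sketch below compares two schedules that both capture 60 frames per hour. The overhead, per-frame time, and current figures are hypothetical placeholders, and `average_current_ma` is an illustrative helper, not an ESP-IDF API.

```python
HOUR_MS = 3_600_000.0


def average_current_ma(frames_per_wake: int, wakes_per_hour: int,
                       overhead_ms: float, per_frame_ms: float,
                       active_ma: float, sleep_ma: float) -> float:
    """Average current over one hour for a wake-capture-sleep schedule."""
    awake_ms = wakes_per_hour * (overhead_ms + frames_per_wake * per_frame_ms)
    if awake_ms > HOUR_MS:
        raise ValueError("schedule does not fit in an hour")
    charge = active_ma * awake_ms + sleep_ma * (HOUR_MS - awake_ms)  # mA*ms
    return charge / HOUR_MS


# Hypothetical figures: 300 ms wake overhead, 200 ms per frame,
# 120 mA while awake, 0.05 mA in deep sleep.
params = dict(overhead_ms=300.0, per_frame_ms=200.0, active_ma=120.0, sleep_ma=0.05)

one_per_wake = average_current_ma(frames_per_wake=1, wakes_per_hour=60, **params)
four_per_wake = average_current_ma(frames_per_wake=4, wakes_per_hour=15, **params)
print(f"1 frame x 60 wakes: {one_per_wake:.3f} mA")
print(f"4 frames x 15 wakes: {four_per_wake:.3f} mA")
```

With these numbers, batching drops the average from roughly 1.05 mA to 0.60 mA. The cost is temporal coverage: four frames every four minutes observe the scene less evenly than one frame per minute, so the right batch size depends on what the robot needs to notice.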

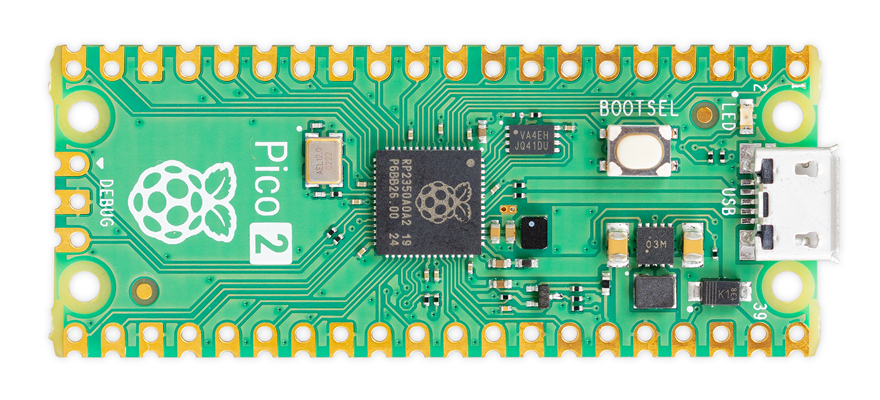

RP2350 and the RP2040 Lineage

The RP2350 builds on the strengths of the Raspberry Pi RP2040 while addressing some of its memory limitations. These devices emphasize deterministic behavior, simple power states, and predictable timing. The RP2350's larger on-chip SRAM (520 KB, up from 264 KB on the RP2040) makes limited ML and perception workloads feasible without external memory. The chip can address external QSPI PSRAM, but most boards ship without it, which still places firm boundaries on model size and image resolution.

TensorFlow Lite Micro can run on RP2350-class devices, but models must be carefully designed to fit within memory constraints. Simple grayscale image processing, sparse feature extraction, and lightweight classifiers are realistic. Full-frame vision pipelines are not.
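Because TensorFlow Lite Micro works from a statically sized tensor arena, fitting a model on an RP2350 is largely a bookkeeping exercise: frame buffers, the arena, and everything else must share on-chip SRAM. The host-side sketch below assumes the RP2350's 520 KB of SRAM and no external PSRAM; the arena and reserve sizes are hypothetical placeholders. On hardware, `MicroInterpreter::arena_used_bytes()` can report the arena actually consumed after `AllocateTensors()`, which is a better input than a guess. `MemoryPlan` is an illustrative name.

```python
from dataclasses import dataclass

RP2350_SRAM_BYTES = 520 * 1024  # on-chip SRAM, no external PSRAM assumed


@dataclass
class MemoryPlan:
    frame_bytes: int         # one raw frame buffer
    n_frame_buffers: int     # e.g. 2 for double buffering
    tensor_arena_bytes: int  # TFLM tensor arena (hypothetical size)
    reserved_bytes: int      # stacks, heap, driver and firmware data (hypothetical)

    def total(self) -> int:
        return (self.frame_bytes * self.n_frame_buffers
                + self.tensor_arena_bytes + self.reserved_bytes)

    def headroom(self, sram_bytes: int = RP2350_SRAM_BYTES) -> int:
        """Bytes left over; negative means the plan does not fit."""
        return sram_bytes - self.total()


# 96x96 grayscale input, double buffered, small classifier.
small = MemoryPlan(frame_bytes=96 * 96, n_frame_buffers=2,
                   tensor_arena_bytes=120 * 1024, reserved_bytes=96 * 1024)
# Single VGA RGB565 frame with a larger arena.
full = MemoryPlan(frame_bytes=640 * 480 * 2, n_frame_buffers=1,
                  tensor_arena_bytes=200 * 1024, reserved_bytes=96 * 1024)

print("small headroom:", small.headroom())
print("full-frame headroom:", full.headroom())
```

The small grayscale plan leaves well over half of SRAM free, while a single VGA RGB565 frame alone is larger than the entire on-chip memory.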

The advantage of this platform lies in its predictable power profile. Deep sleep behavior is reliable, wake times are fast, and frequent short sensing cycles are easy to implement. For robots that prioritize survival and autonomy over perception richness, RP2350-class devices offer a compelling balance.
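Fast, reliable wake-ups turn sensing rate into a budget question: after deep sleep takes its share of the daily charge, how many short wake cycles remain? The sketch assumes a fixed daily charge budget (for example, from a small solar panel), counts each cycle's wake latency at active current, and slightly overcounts sleep charge by ignoring the time spent awake. All numbers are hypothetical; `cycle_charge_uah` and `max_wakes_per_day` are illustrative names.

```python
import math


def cycle_charge_uah(current_ma: float, duration_ms: float) -> float:
    """Charge for one wake cycle in microamp-hours (mA * ms / 3600 = uAh)."""
    return current_ma * duration_ms / 3600.0


def max_wakes_per_day(budget_mah_per_day: float, sleep_ma: float,
                      per_wake_uah: float) -> int:
    """Whole wake cycles affordable after paying for 24 h of deep sleep.

    Conservative: sleep is charged for the full 24 h even though the
    device is awake for part of it.
    """
    spare_uah = (budget_mah_per_day - sleep_ma * 24.0) * 1000.0
    if spare_uah <= 0:
        return 0
    return math.floor(spare_uah / per_wake_uah)


# Hypothetical: 2 ms wake latency + 20 ms of sensing at 30 mA,
# against a 2 mAh/day budget.
per_wake = cycle_charge_uah(current_ma=30.0, duration_ms=2.0 + 20.0)
for sleep_ma in (0.015, 0.08, 0.1):
    wakes = max_wakes_per_day(2.0, sleep_ma, per_wake)
    interval = 86_400 / wakes if wakes else float("inf")
    print(f"sleep {sleep_ma} mA -> {wakes} wakes/day (every {interval:.1f} s)")
```

In this model, raising sleep current from 15 µA to 80 µA cuts the affordable sensing rate by roughly a factor of twenty, and at 100 µA sleep alone exhausts the budget.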

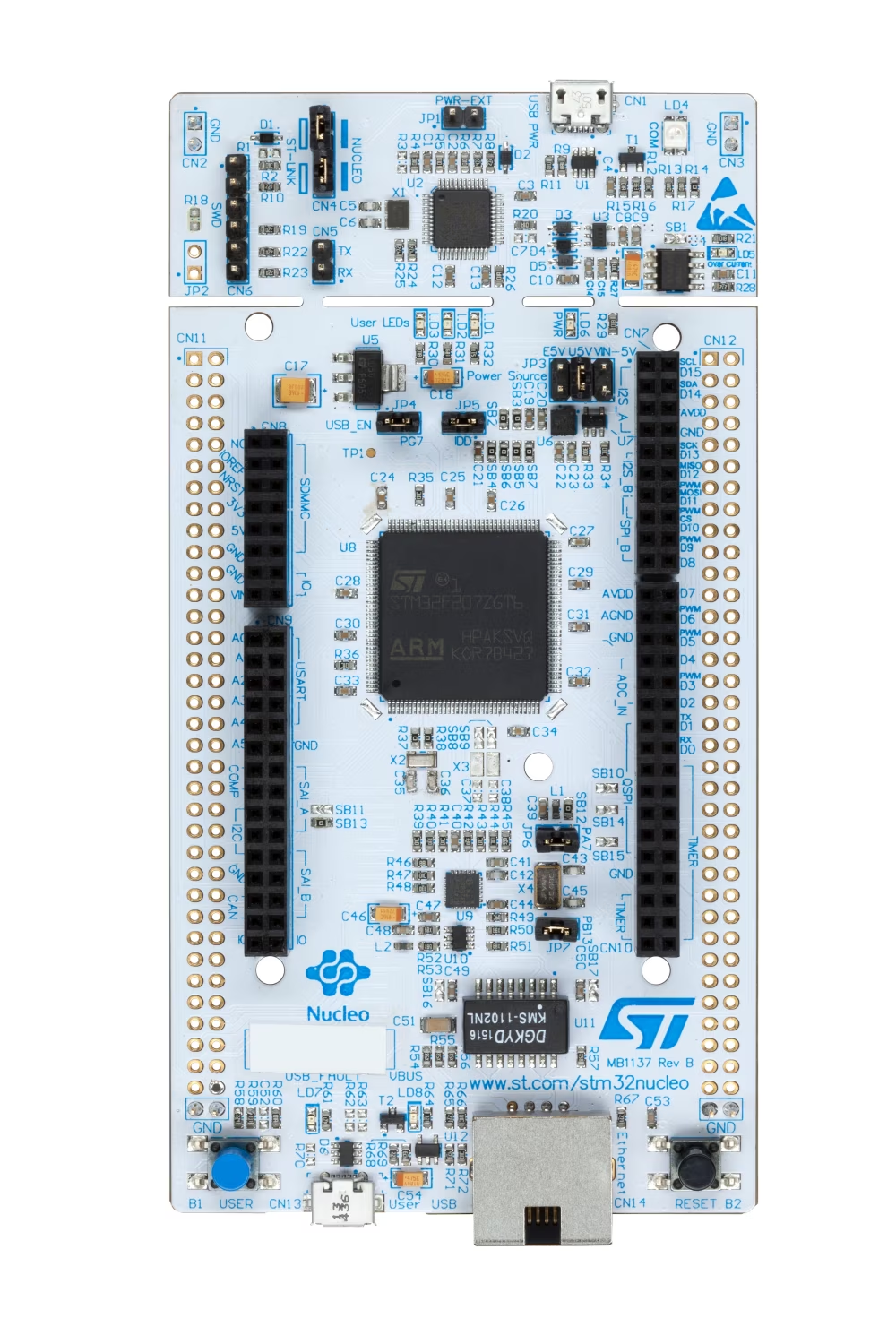

STM32 Microcontrollers with TFLite Support

Several STM32 families support TensorFlow Lite Micro and are commonly used in embedded AI applications. These devices are known for strong low-power performance, stable sleep modes, and broad peripheral support. For robots that emphasize control, sensing, and long idle periods, STM32 ultra-low-power lines perform exceptionally well.

Memory is the primary constraint. While some STM32 variants provide substantial on-chip SRAM, external PSRAM is rarely part of typical STM32 designs. This limits the complexity of perception pipelines and often confines ML workloads to classification, sensor fusion, or signal processing tasks rather than full camera-based vision. The toolchains are powerful but more complex to set up and integrate, which reduces their suitability for learning-focused robots.

STM32 devices excel when energy predictability and reliability matter more than maximum ML capability.

Other TensorFlow Lite–Capable MCUs

A small number of additional MCU families can run TensorFlow Lite Micro but are less central to our current design space. Nordic’s nRF52 devices offer excellent low-power characteristics and can support small ML models, but limited memory restricts vision workloads. The Kendryte K210 includes dedicated AI acceleration and comparatively large memory, but higher power draw and weaker low-power modes make it less suitable for long-lived autonomous robots.

New AI-focused microcontrollers continue to appear, often advertising impressive inference performance. Many of these platforms lack long-term supply stability, documentation, or mature tooling, which makes them risky choices for learning kits and early robotics platforms.

Across all ML-capable microcontrollers, memory consistently emerges as a hidden energy cost. Larger buffers increase idle current when their RAM must stay powered through sleep, lengthen wake times, and amplify data movement overhead. A robot that allocates memory aggressively may consume more energy overall than one that runs simpler algorithms more frequently.

Effective power budgeting therefore treats memory as an explicit design decision rather than a passive resource.
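The claim that aggressive memory use can cost more than frequent simple runs can be illustrated with a toy model in which retained RAM adds to sleep current and every kilobyte moved per cycle adds awake time. The coefficients below are hypothetical and chosen only to show the shape of the tradeoff; real retention currents and copy throughputs vary widely across MCUs and must come from datasheets or measurement. `MemoryDesign` and the constants are illustrative names.

```python
from dataclasses import dataclass

# Hypothetical coefficients for illustration only (not from any datasheet).
BASE_SLEEP_UA = 5.0          # sleep current with minimal RAM retained
RETENTION_UA_PER_KB = 0.05   # extra sleep current per retained KB
ACTIVE_MA = 40.0             # current while awake
MOVE_KB_PER_MS = 10.0        # effective data-movement throughput


@dataclass
class MemoryDesign:
    retained_kb: float         # RAM kept powered through sleep
    moved_kb_per_cycle: float  # data read/copied per sense cycle
    compute_ms: float          # inference time per cycle
    period_s: float            # time between cycles

    def average_current_ua(self) -> float:
        sleep_ua = BASE_SLEEP_UA + RETENTION_UA_PER_KB * self.retained_kb
        awake_ms = self.moved_kb_per_cycle / MOVE_KB_PER_MS + self.compute_ms
        period_ms = self.period_s * 1000.0
        if awake_ms > period_ms:
            raise ValueError("cycle does not fit in its period")
        # Charge in uA*ms: awake at active current, remainder at sleep current.
        charge = ACTIVE_MA * 1000.0 * awake_ms + sleep_ua * (period_ms - awake_ms)
        return charge / period_ms


# Large buffers and a heavier model, run once a minute.
aggressive = MemoryDesign(retained_kb=400, moved_kb_per_cycle=600, compute_ms=120, period_s=60)
# Small buffers and a simpler model, run four times as often.
lean = MemoryDesign(retained_kb=40, moved_kb_per_cycle=20, compute_ms=15, period_s=15)

print(f"aggressive: {aggressive.average_current_ua():.1f} uA")
print(f"lean:       {lean.average_current_ua():.1f} uA")
```

Under these assumptions the lean design samples four times as often while drawing roughly a third of the average current. Different coefficients can flip the result, which is the reason to treat memory as an explicit, measured design input.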

Implications for Autonomous Robot Design

Microcontroller choice directly shapes robot behavior. It determines how often perception can run, what type of perception is feasible, how failures are detected, and how the system recovers. For robots intended to coexist quietly with humans, autonomy is achieved not through raw compute, but through restraint, timing, and energy awareness.

This lab note reflects current observations across a subset of popular, TensorFlow Lite–capable microcontrollers. It is not exhaustive, and it is not final. As hardware and tooling evolve, these tradeoffs will change. Power budgets remain a living design decision, and autonomy emerges from systems that understand when to act and when to sleep.